Azure Datastore Import | Python code to access Azure Data Lake Store

By: Henry

This topic describes how to work with the XML format in Azure Data Factory and Synapse Analytics pipelines.

Learn how to use the Azure Machine Learning CLI or Python SDK to create and work with different registered model types and locations.

I’ve created a simple script to understand the interaction between AzureML and Azure Storage in AzureML CLI v2. I would like to download MNIST Dataset and

Python code to access Azure Data Lake Store

A pure-python interface to the Azure Data-lake Storage Gen 1 system, providing pythonic file-system and file objects, seamless transition between Windows

Represents a client for performing operations on Datastores. You should not instantiate this class directly. Instead, you should create MLClient and use this client via the property

The following code snippets show how to create a connection to Azure Data Lake Storage Gen1 using Python with service-to-service authentication with client secret and client

The Import Data component skips registering your dataset in Azure Machine Learning and imports data directly from a datastore or HTTP URL. For detailed information on

`import pandas as pd` `data = pd.read_csv('blob_sas_url')` The Blob SAS URL can be found by right-clicking the blob file that you want to import in the Azure portal and selecting
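As a minimal sketch of the `pd.read_csv` approach above: the helper below assembles a Blob SAS URL from its parts and hands it to pandas. The function names `build_blob_sas_url` and `load_csv_from_sas_url` are hypothetical, the account, container, and token values are placeholders, and the endpoint pattern assumes the public Azure cloud (`blob.core.windows.net`).

```python
from urllib.parse import quote


def build_blob_sas_url(account, container, blob_name, sas_token):
    """Assemble a Blob SAS URL (hypothetical helper, public Azure cloud assumed)."""
    token = sas_token.lstrip("?")  # the portal may show the token with a leading "?"
    blob_path = quote(blob_name)   # percent-encode spaces etc.; "/" is left intact
    return f"https://{account}.blob.core.windows.net/{container}/{blob_path}?{token}"


def load_csv_from_sas_url(sas_url):
    """Read a CSV blob straight into a DataFrame via its SAS URL."""
    import pandas as pd  # imported lazily so the URL helper works without pandas

    return pd.read_csv(sas_url)
```

In practice the full SAS URL copied from the portal can be passed to `load_csv_from_sas_url` directly; the builder is only useful when the token and blob path are stored separately.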

    import tempfile
    mounted_path = tempfile.mkdtemp()
    # mount dataset onto the mounted_path of a Linux-based compute
    mount_context = dataset.mount(mounted_path)

Contains functionality for datastores that save connection information to Azure Blob and Azure File storage.
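The mount snippet above stops before the mount is actually started. A hedged sketch of the full cycle follows, assuming `dataset` is an SDK v1 `FileDataset` (or any object whose `mount()` returns a context with `start()` and `stop()`); the wrapper name `list_mounted_files` is hypothetical.

```python
import os
import tempfile


def list_mounted_files(dataset):
    """Mount a dataset on a temporary directory, list its top level, then unmount."""
    mounted_path = tempfile.mkdtemp()
    # mount dataset onto the mounted_path of a Linux-based compute
    mount_context = dataset.mount(mounted_path)
    mount_context.start()
    try:
        return sorted(os.listdir(mounted_path))
    finally:
        # always release the mount, even if listing fails
        mount_context.stop()
```

Wrapping the work in `try`/`finally` keeps the mount from lingering on the compute when reading fails partway through.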

The Data class in the Azure ML SDK v2 allows the uploading and creation of a new Data asset, but not its downloading. I understand that the idea is to not use the new SDK

This section walks you through preparing a project to work with the Azure Data Lake Storage client library for Python. From your project directory, install packages for the

- Connect to data with the Azure Machine Learning studio

- Create Azure Machine Learning datasets

- Use Python to manage data in Azure Data Lake Storage

- Azure/azure-data-lake-store-python

To import from a bacpac file into a new single database using the Azure portal, open the appropriate server page and then, on the toolbar, select

For anyone finding this answer through Google: the solution is not the answer that was marked but a comment by Philip Emanuel under this answer. Just to

A client class to interact with Azure ML services. Use this client to manage Azure ML resources such as workspaces, jobs, models, and so on.

Create datastores and datasets to securely connect to data in storage services in Azure with the Azure Machine Learning studio.

In this article, learn how to connect to data storage services on Azure with Azure Machine Learning datastores and the Azure Machine Learning Python SDK. A datastore

You can create datasets from datastores, public URLs, and Azure Open Datasets. For information about a low-code experience, visit Create Azure Machine Learning

Azure Synapse Analytics: The data warehouse holds the data that’s copied over from the SQL database. If you don’t have an Azure Synapse Analytics, see the instructions in

A pure-python interface to the Azure Data-lake Storage Gen 1 system, providing pythonic file-system and file objects, seamless transition between Windows and POSIX remote

Every workspace has two built-in datastores (an Azure Storage blob container, and an Azure Storage file container) that are used as system storage by Azure Machine Learning. There’s

Group Policy requires that the virtual machine has line of sight to, and can communicate with, an Active Directory (AD) domain controller. There are various reason

In Azure, we have two concepts, namely Azure Datastores and Datasets. Datastores are used for storing connection information to Azure

With the present datastore URI you can only read files, not write them. It is correct that datastores are used to avoid authenticating each time, but they are mainly used

Represents a datastore that saves connection information to Azure File storage. You should not work with this class directly. To create a datastore of this type, use the

Downloading from/uploading to Azure ML: All of the below functions will attempt to find a current workspace, if running in Azure ML, or else will attempt to locate the ‘config.json’ file in the current
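To make the datastore URI mentioned above concrete, here is a small sketch that builds the `azureml://` URI in its short and fully qualified forms. The helper names `datastore_uri` and `read_csv_from_datastore` are hypothetical, and reading the URI with pandas assumes the `azureml-fsspec` package is installed in the environment.

```python
def datastore_uri(datastore_name, path, subscription=None,
                  resource_group=None, workspace=None):
    """Build an azureml:// datastore URI (hypothetical helper).

    Returns the short form unless all three workspace identifiers are given.
    """
    path = path.lstrip("/")
    if subscription and resource_group and workspace:
        return (
            f"azureml://subscriptions/{subscription}"
            f"/resourcegroups/{resource_group}"
            f"/workspaces/{workspace}"
            f"/datastores/{datastore_name}/paths/{path}"
        )
    return f"azureml://datastores/{datastore_name}/paths/{path}"


def read_csv_from_datastore(uri):
    """Read a CSV through a datastore URI (assumes azureml-fsspec is installed)."""
    import pandas as pd  # lazy import: only needed when actually reading

    return pd.read_csv(uri)
```

The fully qualified form is the one to use outside a job, for example from a local notebook, where the workspace cannot be inferred.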

Arrays and data frames – Loading a data file in Azure notebooks

Official community-driven Azure Machine Learning examples, tested with GitHub Actions. – Azure/azureml-examples

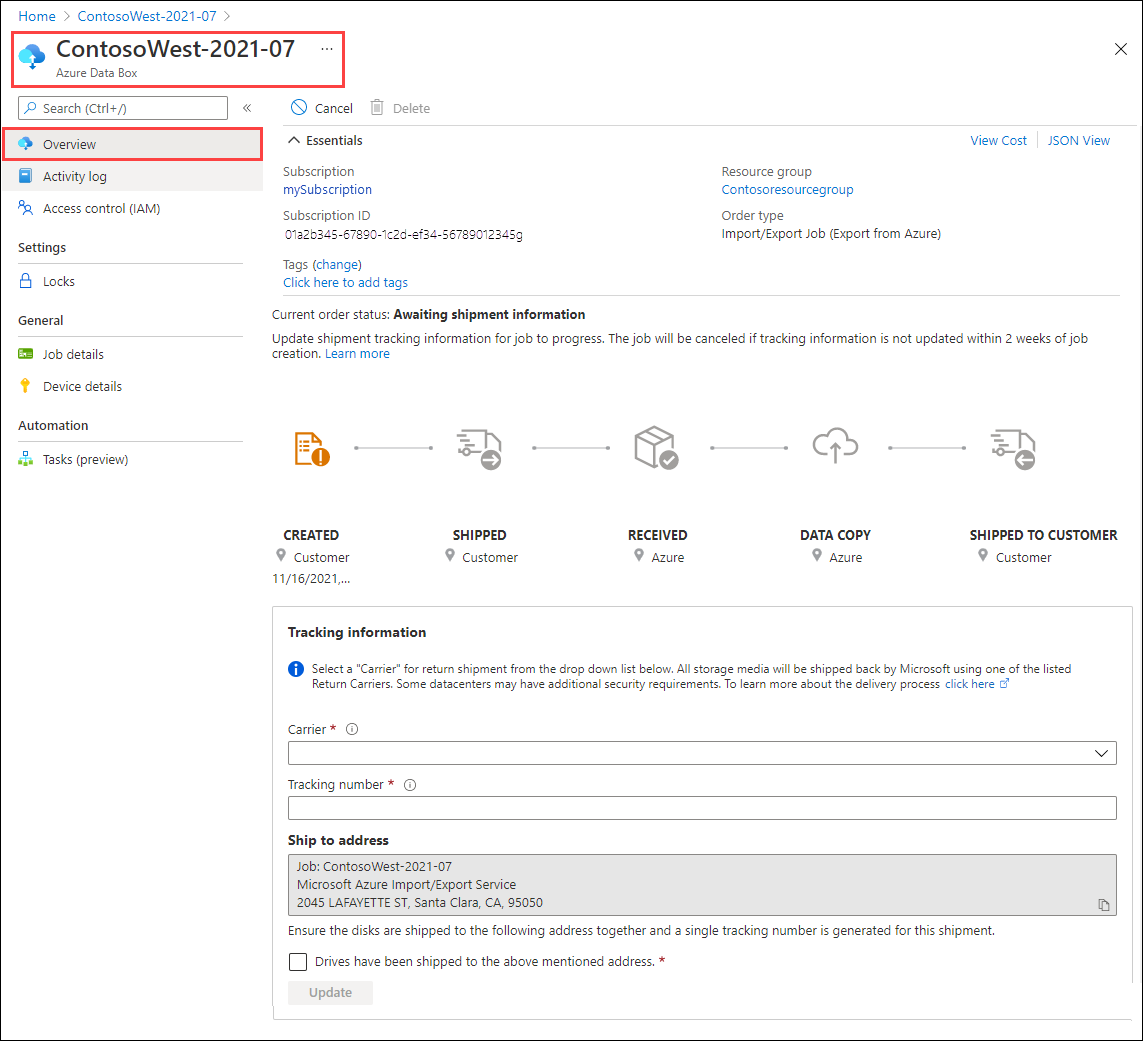

Learn how to create import and export jobs in Azure portal to transfer data to and from Azure Blobs.

① Azure integration runtime ② Self-hosted integration runtime

For Copy activity, this Azure Cosmos DB for NoSQL connector supports: Copy data from and to the Azure

In this example, you submit an Azure Machine Learning job that writes data to your default Azure Machine Learning Datastore. You can optionally set the name value of your data

Discover the diverse data sources (Local Files and Datastore) available in Azure ML Designer’s Import Data module. Streamline data import processes effortlessly for enhanced
