Big Data Model | How to deal with Big Data in Python for ML Projects?

By: Henry

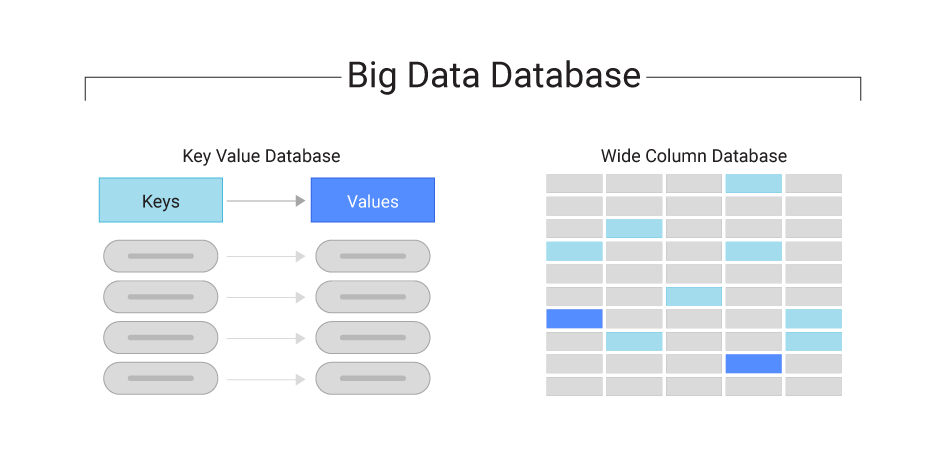

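This article explores in depth the concept, characteristics, and applications of big data models, emphasizing their importance in the data-driven era. A big data model is a structured framework for managing and analyzing massive volumes of data, and it is particularly suited to the “4V” characteristics of big data: volume, velocity, variety, and value density. Its key features include a distributed architecture, high scalability, support for multi-source heterogeneous data, real-time processing, and automation and …

A comparison and ranking of the performance of over 100 AI models (LLMs) across key metrics, including intelligence, price, performance and speed (output speed in tokens per second and latency as TTFT), context window, and others.

Abstract: This paper, based on 28 interviews with a range of business leaders and practitioners, examines the current state of big data use in business, as well as the main opportunities and challenges presented by big data. It begins with an account of the current landscape and what is meant by big data. Next, it draws distinctions between the ways …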

How to deal with Big Data in Python for ML Projects?

Big data analytics (BDA) is recognized as a turning point for firms seeking to improve their performance. Although small and medium-sized enterprises (SMEs) are crucial for every economy, they lag far behind in the use of BDA.

Types of data models in Apache Pig: Pig has four types of data models, three of which are described here:

- Atom: An atomic data value, stored as a string. It can be used both as a number and as a string.
- Tuple: An ordered set of fields.
- Bag: A collection of tuples.

Therefore, this paper has two aims: to discover the advantages and disadvantages of existing secure big data models, and to develop a conceptual secure big data model based on blockchain technology.
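The three Pig data models described above can be loosely mirrored with built-in Python types. This is only an analogy in plain Python, not Pig Latin, and the field names and values are made up for illustration.

```python
# Atom: a single value stored as a string that can also be read as a number.
atom = "42"
atom_as_number = int(atom)

# Tuple: an ordered set of fields (hypothetical fields: name, age).
record = ("ada", 36)

# Bag: a collection of tuples. A Python list is used here as a stand-in.
bag = [("ada", 36), ("grace", 45)]

# Pull one field out of every tuple in the bag.
ages = [age for _name, age in bag]
print(atom_as_number, record, ages)
```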

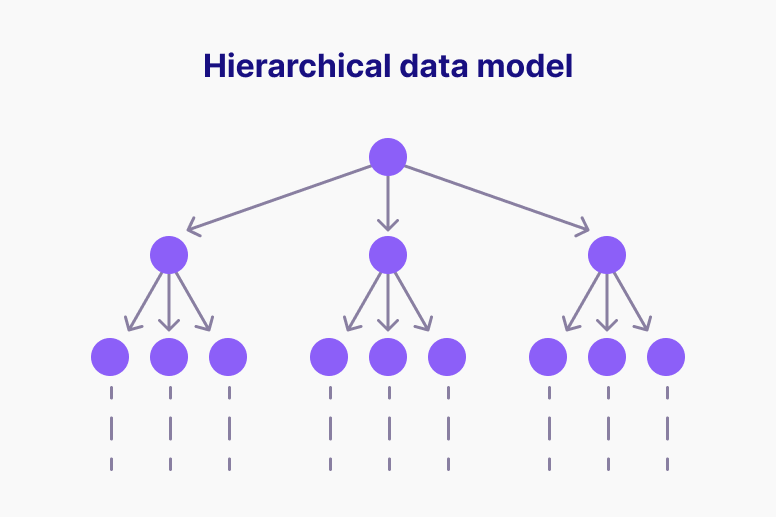

Logical data models are used to design databases and outline the relationships between data elements. Learn the fundamentals and benefits of this model.

Big Data and analytics have become essential factors in managing the COVID-19 pandemic. As no company can escape the effects of the pandemic, mature Big Data and analytics practices are essential for successful …

Data and analytics improve decision outcomes and can unearth new questions, innovative solutions, and opportunities. Learn what data and analytics is and why it is important.

BigQuery is a serverless data analytics platform. You don’t need to provision individual instances or virtual machines to use BigQuery. Instead, BigQuery automatically allocates computing resources as you need them. You can also reserve compute capacity ahead of time in the form of slots, which represent virtual CPUs. The pricing structure of BigQuery reflects this design.

“Big data refers to data sets whose size is beyond the ability of typical database software tools to capture, store, manage and analyze.” (The McKinsey Global Institute, 2012)

“Big data is data sets that are so voluminous and complex that traditional data processing application softwares are inadequate to deal with them.”

Furthermore, my team and I exploit the rapid technical and conceptual development of web services and large-scale computing infrastructure to open new ways of analyzing complex Big Earth system data from observations and models. This research always keeps in mind the possible operational application, for example in the DestinE context.

Transform your career with Coursera’s online Big Data courses. Enroll for free, earn a certificate, and build job-ready skills on your schedule. Join today!

The TDWI Big Data Maturity Model Assessment Tool measures the maturity of a big data and big data analytics program in an objective way across various dimensions that are key to deriving value from big data analytics.

Starting from fresh, day-to-day, real-time big data, the study aims to develop a new data analytics model, adopting the design science research methodology, which can provide valuable options and techniques to make prediction easier from immediate past datasets.

Explore the best big data platforms in 2025: 1. Apache Hadoop, 2. Apache Spark, 3. Google Cloud BigQuery, 4. Amazon EMR, 5. Microsoft Azure HDInsight, 6. Cloudera.

Dimensional modeling was introduced to optimize the data model for analytics in the logical layer of Relational Database Management Systems (RDBMS) without redesigning the physical layer.
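As a rough illustration of dimensional modeling in a relational database, the sketch below builds a tiny star schema in an in-memory SQLite database: one fact table of measurements joined to one dimension table of descriptive attributes. The table names, column names, and rows are hypothetical and chosen only for this example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# One dimension table (descriptive attributes) and one fact table (measures).
conn.executescript("""
CREATE TABLE dim_product (
    product_id INTEGER PRIMARY KEY,
    category   TEXT
);
CREATE TABLE fact_sales (
    sale_id    INTEGER PRIMARY KEY,
    product_id INTEGER REFERENCES dim_product(product_id),
    amount     REAL
);
INSERT INTO dim_product VALUES (1, 'books'), (2, 'games');
INSERT INTO fact_sales VALUES (1, 1, 10.0), (2, 1, 5.0), (3, 2, 20.0);
""")

# A typical analytic query: aggregate the facts, grouped by a dimension attribute.
totals = conn.execute("""
    SELECT d.category, SUM(f.amount)
    FROM fact_sales AS f
    JOIN dim_product AS d ON d.product_id = f.product_id
    GROUP BY d.category
    ORDER BY d.category
""").fetchall()
print(totals)
conn.close()
```

Keeping the measures in the fact table and the descriptive attributes in the dimension table is what lets the analytic query stay a simple join plus a group-by.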

How to implement big data analytics in 6 steps, no matter what. Big data implementation: plan, tech stack, costs, and architecture design best practices.

Read our blog to learn the basics of data modeling, why it’s important, and the different kinds of data models to create for your business to stand out.

Explore the 5V’s of big data and how they help data scientists derive value from their data and allow their organizations to become more customer-centric.

- vtucircle » Big Data Analytics 21CS71

- What is Data Structure: Types, Applications, and Benefits

- Data Stream in Data Analytics

Big data refers to data collections that are extremely large, complex, and fast-growing. Learn how big data enables the training of AI models.

In recent years, large-scale artificial intelligence (AI) models have become a focal point in technology, attracting widespread attention and acclaim. Notable examples include Google’s BERT and OpenAI’s GPT, which have scaled their parameter sizes to hundreds of billions or even tens of trillions. This growth has been accompanied by a significant increase in …

A Universe of Data that Drives Evidence-Based Research and Individualized Patient Care. Cosmos combines billions of clinical data points in a way that forms a high-quality, representative, and integrated data set that can be used to change the health and lives of people everywhere. Health system affiliates can request access to this data.

What is big data? Big data is the set of technologies created to store, analyse, and manage this bulk data, a macro-tool created to identify patterns in the chaos of this explosion in information in order to design smart solutions. Today it is used in areas as diverse as medicine, agriculture, gambling, and environmental protection.

The large semantic model storage format allows semantic models in Power BI Premium to grow beyond 10 GB in size.

Learn about data modeling, its process, why it’s done, and the different types of data models. This definition also covers the pros and cons of data modeling.

Conceptual modeling continues to play a vital role in the emerging data era, where the correct design and development of mobile or sensor analytics, Big Data systems, decision support systems, NoSQL databases, smart cities, and biomedical systems will be crucial.

- TDWI BIG DATA MATURITY MODEL GUIDE

- Big data business models: Challenges and opportunities

- Big Data Implementation: 6 Steps to Success

- Distribution network design with big data: model and analysis

- What Is Data and Analytics: Everything You Need to Know

The size of the data you can load and train on with your local computer is typically limited by the memory in your computer. Let’s see how to deal with big data in Python.

Download VTU notes, model papers, and the question bank for Big Data Analytics 21CS71 (2021 scheme, 7th semester).
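One common way around the memory limit mentioned above is to read a file in fixed-size chunks and keep only running totals, so the full dataset never has to fit in memory at once. The following is a minimal sketch using only the standard library; the function names, the column name `value`, and the in-memory sample standing in for a large CSV file are all assumptions made for illustration.

```python
import csv
import io
from itertools import islice


def iter_chunks(rows, chunk_size):
    """Yield lists of at most chunk_size rows from any row iterator."""
    rows = iter(rows)
    while True:
        chunk = list(islice(rows, chunk_size))
        if not chunk:
            return
        yield chunk


def streaming_mean(csv_file, column, chunk_size=1000):
    """Compute the mean of one numeric column, one chunk at a time."""
    reader = csv.DictReader(csv_file)
    total = 0.0
    count = 0
    for chunk in iter_chunks(reader, chunk_size):
        # Only the current chunk is held in memory here.
        total += sum(float(row[column]) for row in chunk)
        count += len(chunk)
    return total / count if count else float("nan")


# Hypothetical sample: an in-memory "file" standing in for a large CSV on disk.
sample = io.StringIO("value\n" + "\n".join(str(i) for i in range(1, 11)))
mean_value = streaming_mean(sample, "value", chunk_size=3)
print(mean_value)
```

The same pattern applies to a real file opened with `open(path, newline="")`. Statistics that cannot be built from running totals, such as an exact median, need a different approach.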

This study addresses the problem of locating distribution centers in a single-echelon, capacitated distribution network. Such a network consists of several potential distribution centers and various demand points dispersed across different regional markets. The distribution operations of this network generate massive amounts of data. The problem is how to utilize big …

In businesses, data scientists and analysts use Big Data Modeling to create frameworks that enable efficient querying, reporting, and machine learning tasks. This step is crucial in preparing data for actionable insights. A concrete example of Big Data Modeling: a logistics company uses a Big Data model to predict delivery times.

Explore the key components and layers of Big Data Architecture to understand how data processing systems work effectively in a big data environment.

Big data primarily refers to data sets that are too large or complex to be dealt with by traditional data-processing software. Data with many entries (rows) offer greater statistical power, while data with higher complexity (more attributes or …

Data modeling is defined as the central step in software engineering that involves evaluating all the data dependencies for the application, explicitly explaining (typically through visualizations) how the data will be used by the software, and defining data objects that will be stored in a database for later use. This article explains how data modeling works and the best …

Read this blog article to learn everything you need to know about what data models are and their relevance and use in data analytics.
