Local Polynomial Regression: A Nonparametric Regression Approach

By: Henry

Local regression fits parametric models locally by using kernel weights. Local regression is proving to be a particularly simple and effective method of nonparametric regression.
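The sketch below makes the idea of fitting parametric models locally with kernel weights concrete. It is a minimal Python/NumPy estimate, at a single point, using a local polynomial of degree $p$. The Gaussian kernel, the fixed bandwidth `h` and the function names are illustrative assumptions. They are not taken from any particular source or library.

```python
import numpy as np

def gaussian_kernel(u):
    """Gaussian kernel without its normalising constant (it cancels in weighted least squares)."""
    return np.exp(-0.5 * u**2)

def local_poly(x, y, x0, h, p=1):
    """Estimate m(x0) by a kernel-weighted polynomial fit of degree p centred at x0."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    d = x - x0
    w = gaussian_kernel(d / h)                  # kernel weights K((x_i - x0) / h)
    X = np.vander(d, N=p + 1, increasing=True)  # columns 1, d, d^2, ..., d^p
    sw = np.sqrt(w)
    # Weighted least squares: minimise sum_i w_i * (y_i - sum_j beta_j * d_i^j)^2
    beta, *_ = np.linalg.lstsq(X * sw[:, None], y * sw, rcond=None)
    return beta[0]                              # intercept = fitted value at x0
```

Evaluating `local_poly` over a grid of points traces out the fitted curve. Setting `p=0` gives a local constant fit, which is a kernel-weighted average, and `p=1` gives a local linear fit.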

To achieve this, a model-based approach is adopted, using local polynomial regression estimation to predict the nonsampled values of the survey variable $y$.
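In the finite-population setting the goal is often the population total. One common way to estimate it is to add the observed $y$ values of the sampled units to the predicted values of the nonsampled units: $\hat T = \sum_{i \in s} y_i + \sum_{j \notin s} \hat m(x_j)$. This sketch rests on several assumptions: an auxiliary variable $x$ known for every unit, a local linear fit with a Gaussian kernel, and hypothetical function names. It is not necessarily the estimator used in the source.

```python
import numpy as np

def local_linear(x, y, x0, h):
    """Kernel-weighted linear fit at x0 (Gaussian kernel); returns the intercept."""
    d = x - x0
    w = np.exp(-0.5 * (d / h) ** 2)
    X = np.column_stack([np.ones_like(d), d])
    sw = np.sqrt(w)
    beta, *_ = np.linalg.lstsq(X * sw[:, None], y * sw, rcond=None)
    return beta[0]

def predict_total(x_pop, sampled, y_sample, h):
    """Model-based prediction of the population total of y.

    x_pop    : auxiliary variable x, known for every population unit (NumPy array)
    sampled  : boolean mask, True for units in the sample
    y_sample : observed y for the sampled units, in the order of x_pop[sampled]
    """
    x_s = x_pop[sampled]
    x_r = x_pop[~sampled]  # nonsampled units whose y must be predicted
    y_hat_r = np.array([local_linear(x_s, y_sample, t, h) for t in x_r])
    return y_sample.sum() + y_hat_r.sum()
```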

Weighted Local Linear Approach to Censored Nonparametric Regression

The regular quantile regression (QR) method often specifies a linear or nonlinear model and then estimates its coefficients to obtain the estimated conditional quantiles. This approach may be restricted by the linear model setting. To overcome this problem, this paper proposes a direct nonparametric quantile regression method with five …

Abstract: In nonparametric local polynomial regression, the adaptive selection of the scale parameter (window size/bandwidth) is a key problem. Recently, new efficient algorithms based on Lepski’s approach have been proposed in mathematical statistics for spatially adaptive varying-scale denoising.

Su and White (2011b) test conditional independence using local polynomial quantile regression. This approach has the appealing advantage of a parametric convergence rate, but at the cost of a non-pivotal asymptotic distribution. Bouezmarni et al. (2012) develop a nonparametric copula-based test for conditional independence.
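Local polynomial quantile regression has a simple relative, the local constant version. It minimises the kernel-weighted check loss $\sum_i K((x_i - x_0)/h)\,\rho_\tau(y_i - q)$ over $q$, with $\rho_\tau(u) = u(\tau - \mathbf{1}\{u < 0\})$. The solution is a kernel-weighted $\tau$-quantile of the responses. This sketch assumes a Gaussian kernel and uses a hypothetical function name. It is not the method proposed in the paper quoted above.

```python
import numpy as np

def local_quantile(x, y, x0, h, tau=0.5):
    """Local-constant estimate of the conditional tau-quantile of y at x = x0.

    Returns the smallest y_i whose cumulative kernel weight (in sorted order)
    reaches tau times the total weight, which minimises the weighted check loss.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    w = np.exp(-0.5 * ((x - x0) / h) ** 2)   # Gaussian kernel weights
    order = np.argsort(y)
    y_sorted, w_sorted = y[order], w[order]
    cum = np.cumsum(w_sorted)
    idx = np.searchsorted(cum, tau * cum[-1])  # first index with cum >= tau * total
    return y_sorted[idx]
```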

1 Regression splines

Regression splines and smoothing splines are motivated from a different perspective than kernels and local polynomials. With kernels and local polynomials, we started off with a special kind of local averaging and moved our way up to higher-order local models. With regression splines and smoothing splines, we build our estimator globally, from a set of select basis functions.

Nonparametric regression methods offer an alternative to parametric estimation. They require only weak identification assumptions and thus minimize the risk of model misspecification. A nonparametric approach with data-driven smoothing parameters to the estimation of the regression operator was also investigated in [17] for iid errors. It seems worthwhile to investigate possible extensions of the approach of this article to a semi-parametric set-up, such as the functional single index model investigated in [1] and [28], and also …
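The following sketch illustrates building the estimator globally from a set of basis functions. It fits a cubic regression spline by ordinary least squares, using the truncated power basis $1, x, x^2, x^3, (x-\kappa_k)_+^3$. The choice of basis, the knot locations and the function names are assumptions for the example. In practice a B-spline basis is usually preferred for numerical stability.

```python
import numpy as np

def cubic_spline_basis(x, knots):
    """Truncated power basis: 1, x, x^2, x^3 and (x - k)_+^3 for each interior knot k."""
    x = np.asarray(x, dtype=float)
    cols = [np.ones_like(x), x, x**2, x**3]
    cols += [np.clip(x - k, 0.0, None) ** 3 for k in knots]
    return np.column_stack(cols)

def fit_regression_spline(x, y, knots):
    """Global least-squares fit of the spline coefficients."""
    B = cubic_spline_basis(x, knots)
    coef, *_ = np.linalg.lstsq(B, np.asarray(y, dtype=float), rcond=None)
    return coef

def predict_regression_spline(coef, x_new, knots):
    """Evaluate the fitted spline at new points."""
    return cubic_spline_basis(x_new, knots) @ coef
```

Unlike the local fits, every observation here influences every coefficient. Locality enters only through where the knots are placed.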

Local regression is an old method for smoothing data. It has origins in the graduation of mortality data and the smoothing of time series in the late 19th and early 20th centuries. Still, new work in local regression continues at a rapid pace. We review the history of local regression and discuss four of its basic components that must be chosen when using local regression in practice.

Polynomials, however, are not local: in polynomial regression, points that are far away from $x_i$ have a big impact on $\hat{f}(x_i)$. This produces spurious oscillations at the boundaries and unstable estimates. This is known as Runge’s phenomenon in numerical analysis.

[Figure: Old friends: polynomials (mcycle data)]
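Runge’s phenomenon is easy to reproduce. The sketch below interpolates the classic Runge function $1/(1+25x^2)$ on $[-1, 1]$ with global polynomials through equispaced points. It then reports the largest error on a fine grid. Going from degree 4 to degree 10 makes the maximum error larger, and the error peaks near the boundaries. The test function and degrees are illustrative choices, not from the source.

```python
import numpy as np

def runge(x):
    return 1.0 / (1.0 + 25.0 * x**2)

def max_interp_error(degree, n_grid=2001):
    """Interpolate the Runge function with one global polynomial through
    degree + 1 equispaced nodes on [-1, 1]; return (max error, location)."""
    nodes = np.linspace(-1.0, 1.0, degree + 1)
    coef = np.polyfit(nodes, runge(nodes), degree)
    grid = np.linspace(-1.0, 1.0, n_grid)
    err = np.abs(np.polyval(coef, grid) - runge(grid))
    i = np.argmax(err)
    return err[i], grid[i]

for deg in (4, 10):
    e, where = max_interp_error(deg)
    print(f"degree {deg:2d}: max error {e:.3f} at x = {where:+.3f}")
```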

Keywords: Regression Discontinuity Design; Regression Kink Design; Local Polynomial Estimation; Polynomial Order

¹ We thank Pat Kline, Pauline Leung and seminar participants at Brandeis and George Washington University for helpful comments, and we thank Samsun Knight and Carl Lieberman for excellent research assistance.

- Local polynomial regression and variable selection

- A Local Polynomial Jump Detection Algorithm In Nonparametric Regression

- LOCAL POLYNOMIAL REGRESSION ON UNKNOWN MANIFOLDS

- 10 Nonparametric Regression

- Local Polynomial regression

Local polynomial regression has been shown to be a useful nonparametric technique in various kinds of local modelling; see Fan and Gijbels (1996, 2000). We shall sketch in Section 2 that local linear regression achieves this phenomenon for local smoothness $p = 2$. We will also argue that our procedure attains the global IMSE if global smoothness is …

One common feature of the existing sparse methods is that variable selection is “global” in nature, attempting to universally include or exclude a predictor. Such an approach does not naturally reconcile well with some nonparametric techniques, such as local polynomial regression, which focus on a “local” subset of the data to estimate the response. In this local context it …

10.4.1 Multivariate local polynomial regression

Kernel regression can be carried out with multiple covariates, but this requires generalising the kernel function so that it is a function of $m$ variables.
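One common way to generalise the kernel to $m$ covariates is a product kernel, $K_h(\mathbf{x} - \mathbf{x}_0) = \prod_{j=1}^{m} K\big((x_j - x_{0j})/h_j\big)$. It is combined with a local linear design in all $m$ centred covariates. The sketch below assumes a Gaussian product kernel with one bandwidth per covariate. The function name is hypothetical.

```python
import numpy as np

def local_linear_mv(X, y, x0, h):
    """Local linear estimate of m(x0) with m covariates.

    X  : (n, m) covariate matrix
    x0 : (m,) target point
    h  : (m,) bandwidths, one per covariate
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    D = X - np.asarray(x0, dtype=float)        # (n, m) centred covariates
    U = D / np.asarray(h, dtype=float)
    # Product of 1-D Gaussian kernels = exp(-0.5 * sum_j u_j^2)
    w = np.exp(-0.5 * np.sum(U**2, axis=1))
    Z = np.column_stack([np.ones(len(y)), D])  # local design: 1, (x - x0)
    sw = np.sqrt(w)
    beta, *_ = np.linalg.lstsq(Z * sw[:, None], y * sw, rcond=None)
    return beta[0]                             # intercept = m_hat(x0)
```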

This paper studies an adaptive bandwidth selection method for local polynomial regression (LPR) and its application to multi-resolution analysis (MRA) of non-uniformly sampled data. In LPR, the observations are modeled locally by a polynomial, using a least-squares criterion with a kernel of a certain support or bandwidth, so that a better bias-variance tradeoff can …

6.2.2 Local polynomial regression

The Nadaraya–Watson estimator can be seen as a particular case of a wider class of nonparametric estimators, the so-called local polynomial estimators. Specifically, Nadaraya–Watson corresponds to performing a local constant fit.
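The difference between a local constant (Nadaraya–Watson) fit and a local linear fit is clearest at the boundary of the design. The sketch below computes both in closed form. It writes $S_j = \sum_i w_i (x_i - x_0)^j$ and $T_j = \sum_i w_i (x_i - x_0)^j y_i$. The local linear intercept is then $\hat m(x_0) = (S_2 T_0 - S_1 T_1)/(S_0 S_2 - S_1^2)$. The Gaussian kernel and the function names are assumptions for illustration.

```python
import numpy as np

def kernel_weights(x, x0, h):
    return np.exp(-0.5 * ((x - x0) / h) ** 2)

def nadaraya_watson(x, y, x0, h):
    """Local constant fit: a kernel-weighted average of the responses."""
    w = kernel_weights(x, x0, h)
    return np.sum(w * y) / np.sum(w)

def local_linear(x, y, x0, h):
    """Local linear fit via the weighted moments S_j and T_j."""
    d = x - x0
    w = kernel_weights(x, x0, h)
    s0, s1, s2 = np.sum(w), np.sum(w * d), np.sum(w * d**2)
    t0, t1 = np.sum(w * y), np.sum(w * d * y)
    return (s2 * t0 - s1 * t1) / (s0 * s2 - s1**2)
```

Take data lying exactly on the line $y = x$ over $[0, 1]$. The local linear estimate at $x_0 = 0$ recovers $0$. The Nadaraya–Watson estimate is pulled upward, because every neighbouring point lies to the right of $x_0$.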

On Bandwidth Selection in Local Polynomial Regression

Local Polynomial Regression (LPR) is a nonparametric regression method that aims to produce a smooth curve through the scatterplot of the response (dependent) variable against the predictor (independent) variable.

We propose a nonparametric regression approach to the estimation of a finite population total in model-based frameworks in the case of stratified sampling.

Polynomial regression fits a nonlinear model to the data. As a statistical estimation problem, however, it is linear, because the regression function $E(y \mid x)$ is linear in the unknown parameters estimated from the data. Thus, polynomial regression is a special case of linear regression.
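The model is linear in its coefficients, so polynomial regression can be fitted by ordinary least squares. The design matrix has columns $1, x, x^2, \dots, x^d$. Here is a minimal NumPy sketch with a hypothetical function name:

```python
import numpy as np

def polynomial_regression(x, y, degree):
    """OLS on the design matrix [1, x, x^2, ..., x^degree].

    Nonlinear in x, linear in the coefficients: plain linear regression
    with transformed regressors. coef[j] multiplies x**j.
    """
    X = np.vander(np.asarray(x, dtype=float), N=degree + 1, increasing=True)
    coef, *_ = np.linalg.lstsq(X, np.asarray(y, dtype=float), rcond=None)
    return coef
```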

This means that the best model for predicting Indonesia’s inflation rate is the second-order local polynomial nonparametric regression model, because it has the smallest MAPE value.

Abstract. Regression analysis is a statistical method used to model response variables with predictor variables. Regression analysis can follow a parametric, semiparametric or nonparametric approach. The nonparametric approach is more complex than the parametric one. Some nonparametric approaches include local and spline …

In this article, we introduce LASER (Locally Adaptive Smoothing Estimator for Regression), a computationally efficient, locally adaptive nonparametric regression method that performs variable-bandwidth local polynomial regression. We prove that it adapts (near-)optimally to the local Hölder exponent of the underlying regression function simultaneously at all points in its domain.
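The inflation study compares candidate models by mean absolute percentage error. We assume the usual definition, $\text{MAPE} = \frac{100}{n}\sum_{t=1}^{n} \left|\frac{y_t - \hat y_t}{y_t}\right|$. The minimal sketch below computes it and picks the polynomial order with the smallest value. The function names and the dictionary of candidate predictions are hypothetical.

```python
import numpy as np

def mape(actual, predicted):
    """Mean absolute percentage error in percent; undefined if any actual value is 0."""
    actual = np.asarray(actual, dtype=float)
    predicted = np.asarray(predicted, dtype=float)
    return 100.0 * np.mean(np.abs((actual - predicted) / actual))

def best_order(actual, predictions_by_order):
    """Return the polynomial order whose predictions have the smallest MAPE.

    predictions_by_order: dict mapping order -> array of predicted values.
    """
    scores = {order: mape(actual, pred) for order, pred in predictions_by_order.items()}
    return min(scores, key=scores.get), scores
```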

Katkovnik, V., “Multiresolution local polynomial regression: a new approach to pointwise spatial adaptation”, Digital Signal Process., vol. 15, pp. 73–116, 2005.

In this paper we study a local polynomial estimator of the regression function and its derivatives. For the local polynomial estimation problem, we propose a sequential technique based on a multivariate counterpart of the stochastic approximation method for successive experiments. We present our results in a more general context by considering the weakly …

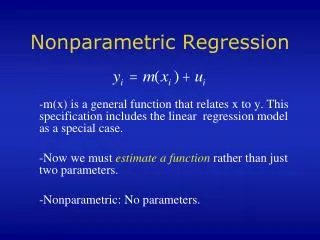

where $\epsilon_i$ is mean-zero noise. The simple linear regression model assumes that $m(x) = \beta_0 + \beta_1 x$, where $\beta_0$ and $\beta_1$ are the intercept and slope parameters. In the first part of the lecture, we will talk about methods that directly estimate the regression function $m(x)$ without imposing any parametric form on $m(x)$. This approach is called nonparametric regression.

In Cai (2003), a nonparametric estimator of the regression function for a censored lifetime response variable is proposed using local polynomial fitting.

In this paper, we propose a local polynomial $L_p$-norm regression that replaces weighted least-squares estimation with weighted $L_p$-norm estimation for fitting the polynomial locally. We also introduce a new method for estimating the parameter $p$ from the residuals, enhancing the adaptability of the approach.

Su and White (2012) test conditional independence using local polynomial quantile regression. Bouezmarni et al. (2012) develop a nonparametric copula-based test.

Su, L. & White, H. (2012) Conditional independence specification testing for dependent process with local polynomial quantile regression. Advances in Econometrics 29, 355–434.
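The local polynomial $L_p$-norm regression described above has no closed form for general $p$. For $1 \le p \le 2$, however, it can be computed by iteratively reweighted least squares (IRLS), since $|r|^p = |r|^{p-2} r^2$. The sketch below applies IRLS to a local linear fit with a Gaussian kernel. IRLS, the `eps` safeguard and the function name are assumptions for illustration, not the paper's estimation procedure. The sketch also omits the paper's method for estimating $p$.

```python
import numpy as np

def local_linear_lp(x, y, x0, h, p=1.5, n_iter=100, eps=1e-8):
    """Local linear fit at x0 minimising sum_i K_i * |y_i - b0 - b1 * (x_i - x0)|^p
    by iteratively reweighted least squares (intended for 1 <= p <= 2)."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    d = x - x0
    k = np.exp(-0.5 * (d / h) ** 2)       # kernel weights
    X = np.column_stack([np.ones_like(d), d])
    w = k.copy()                          # first pass = ordinary weighted least squares
    for _ in range(n_iter):
        sw = np.sqrt(w)
        beta, *_ = np.linalg.lstsq(X * sw[:, None], y * sw, rcond=None)
        r = np.abs(y - X @ beta)
        # Reweight by K_i * |r_i|^(p - 2); eps keeps the weights finite when r_i is 0
        w = k * np.maximum(r, eps) ** (p - 2)
    return beta[0]
```

With `p=2` the reweighting leaves the kernel weights unchanged, and the result is the ordinary local linear fit. With `p=1`, a single gross outlier near $x_0$ has much less influence on the estimate.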

Local polynomial regression is performed by the standard R functions lowess() (locally weighted scatterplot smoother, for the simple-regression case) and loess() (local regression, more generally).
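The core idea behind `lowess()` fits in a few lines. For each observation, take the nearest fraction of the data, weight it with the tricube kernel $(1 - |u|^3)^3$, and fit a weighted straight line. The Python sketch below is a simplified illustration, not a port of R's `lowess()`. It omits the robustness iterations (R's `iter` argument) and the `delta` interpolation shortcut, and its `frac` plays the role of R's span `f`. The function name is hypothetical.

```python
import numpy as np

def lowess_fit(x, y, frac=2/3):
    """Simplified LOWESS: at each x_i, a local linear fit on the nearest
    ceil(frac * n) points with tricube weights (no robustness iterations)."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    n = len(x)
    r = max(3, int(np.ceil(frac * n)))       # neighbourhood size
    fitted = np.empty(n)
    for i in range(n):
        dist = np.abs(x - x[i])
        h = max(np.sort(dist)[r - 1], 1e-12)  # distance to the r-th nearest point (guarded)
        u = np.clip(dist / h, 0.0, 1.0)
        w = (1.0 - u**3) ** 3                # tricube weights, zero at and beyond h
        X = np.column_stack([np.ones(n), x - x[i]])
        sw = np.sqrt(w)
        beta, *_ = np.linalg.lstsq(X * sw[:, None], y * sw, rcond=None)
        fitted[i] = beta[0]
    return fitted
```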

A common feature of the Lepski-based algorithms for adaptive bandwidth selection in nonparametric local polynomial regression is that they form test-estimates …

Texas A&M University and Syracuse University. Abstract: Local linear kernel methods have been shown to dominate local constant methods for the nonparametric estimation of regression functions. In this paper we study the theoretical properties of cross-validated smoothing parameter selection for the local linear kernel estimator. We derive the rate of convergence of the cross …
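Leave-one-out cross-validation for the bandwidth of the local linear estimator can be sketched directly. For each candidate $h$, predict every $y_i$ from the other $n-1$ observations and average the squared errors. The Gaussian kernel, the bandwidth grid and the function names below are assumptions. The brute-force loop costs $O(n^2)$ per bandwidth and is meant only for small samples.

```python
import numpy as np

def local_linear(x, y, x0, h):
    """Closed-form local linear estimate at x0 with a Gaussian kernel."""
    d = x - x0
    w = np.exp(-0.5 * (d / h) ** 2)
    s0, s1, s2 = w.sum(), (w * d).sum(), (w * d**2).sum()
    t0, t1 = (w * y).sum(), (w * d * y).sum()
    return (s2 * t0 - s1 * t1) / (s0 * s2 - s1**2)

def loocv_score(x, y, h):
    """Mean squared leave-one-out prediction error for bandwidth h."""
    n = len(x)
    errs = np.empty(n)
    for i in range(n):
        keep = np.arange(n) != i
        errs[i] = y[i] - local_linear(x[keep], y[keep], x[i], h)
    return np.mean(errs**2)

def select_bandwidth(x, y, grid):
    """Return the grid bandwidth with the smallest LOOCV score, plus all scores."""
    scores = [loocv_score(x, y, h) for h in grid]
    return grid[int(np.argmin(scores))], scores
```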
