Why Avro For Kafka Data? – How to Serialize and Deserialize Dates in Avro

By: Henry

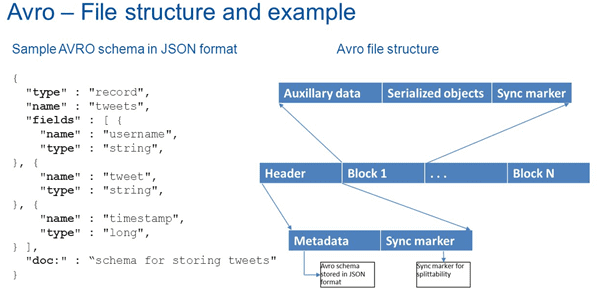

Avro Data Serialization

Apache Avro is an open-source binary data serialization format and one of the serialization formats that Confluent Schema Registry supports for Kafka. This post looks at why Avro serialization is often a better choice than JSON serialization for Kafka data: Avro encodes records in a compact binary form, and every record is written against an explicit schema.

What is Avro and Why Do I Care?

The Apache Avro website describes Avro as a data serialization system that provides rich data structures in a compact, fast, binary data format. A common practical question is how to serialize and deserialize date values, such as Java Date objects, with Avro.
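Avro has no native date type. Per the Avro specification, the `date` logical type annotates an `int` that counts days since the Unix epoch (1970-01-01). The sketch below shows that conversion in Python; `date_to_avro_int`, `avro_int_to_date`, and the `birthday` field name are hypothetical, not part of any Avro library. In Java, `LocalDate.toEpochDay()` yields the same number.

```python
from datetime import date, timedelta

# Avro `date` logical type: an int counting days since 1970-01-01.
EPOCH_DATE = date(1970, 1, 1)


def date_to_avro_int(d: date) -> int:
    """Hypothetical helper: calendar date -> Avro `date` value (days since epoch)."""
    return (d - EPOCH_DATE).days


def avro_int_to_date(days: int) -> date:
    """Hypothetical helper: Avro `date` value -> calendar date."""
    return EPOCH_DATE + timedelta(days=days)


# Field schema: {"name": "birthday", "type": {"type": "int", "logicalType": "date"}}
value = date_to_avro_int(date(2019, 8, 24))
print(value)                    # 18132
print(avro_int_to_date(value))  # 2019-08-24
```

Dates before 1970 simply become negative integers, which Avro's `int` encoding handles without special cases.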

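Is it possible to use date-time fields such as "2019-08-24T14:15:22.000Z" in Avro? The docs say that one needs to use type `int` or `long` with a logical type: `date` annotates an `int` counting days since the Unix epoch, and `timestamp-millis` (or `timestamp-micros`) annotates a `long` counting milliseconds (or microseconds) since 1970-01-01T00:00:00Z.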
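Per the Avro specification, a value like "2019-08-24T14:15:22.000Z" is not stored as text: with the `timestamp-millis` logical type it is stored as a `long` holding milliseconds since the epoch, UTC. A minimal Python sketch of the round trip follows; the helper names and the `created_at` field are hypothetical. In Java, `Instant.parse("2019-08-24T14:15:22.000Z").toEpochMilli()` produces the same `long`.

```python
from datetime import datetime, timedelta, timezone

# Avro `timestamp-millis` logical type: a long counting milliseconds since
# 1970-01-01T00:00:00Z. The ISO-8601 text itself is not what gets stored.
EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def iso_to_timestamp_millis(text: str) -> int:
    """Hypothetical helper: ISO-8601 string with a UTC offset -> epoch milliseconds."""
    # datetime.fromisoformat accepts a trailing "Z" only from Python 3.11,
    # so rewrite it as an explicit +00:00 offset first.
    if text.endswith("Z"):
        text = text[:-1] + "+00:00"
    delta = datetime.fromisoformat(text) - EPOCH  # TypeError if no offset is given
    # Integer arithmetic on the timedelta avoids float rounding.
    return delta.days * 86_400_000 + delta.seconds * 1_000 + delta.microseconds // 1_000


def timestamp_millis_to_datetime(millis: int) -> datetime:
    """Hypothetical helper: epoch milliseconds -> timezone-aware UTC datetime."""
    return EPOCH + timedelta(milliseconds=millis)


# Field schema: {"name": "created_at", "type": {"type": "long", "logicalType": "timestamp-millis"}}
millis = iso_to_timestamp_millis("2019-08-24T14:15:22.000Z")
print(millis)                                            # 1566656122000
print(timestamp_millis_to_datetime(millis).isoformat())  # 2019-08-24T14:15:22+00:00
```

Because the stored value is an absolute instant in UTC, the original offset in the string is not preserved; a consumer that needs local time must convert it after decoding.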

Protobuf vs Avro: The Best Serialization Method for Kafka

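Several features explain why Avro is so popular and why it is a good choice. Every record has an Avro schema; schemas are defined in JSON, which makes Avro easy to implement in any language that already has a JSON library; and the data itself is untagged, meaning field names and type information are not repeated in every record, because the reader already has the schema. Confluent's Kafka Schema Registry can then manage these event schemas across microservices, with Avro as the serialization format.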
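The "untagged data" point can be made concrete with Avro's binary encoding as defined in the Avro specification: `int` and `long` values are written as zig-zag variable-length integers, a `string` as its length followed by UTF-8 bytes, and a record as its fields in schema order with no field names. The Python sketch below assumes a hypothetical two-field record schema (`id` as `long`, `name` as `string`); the helper names are illustrative, not an Avro library API.

```python
import json


def zigzag(n: int) -> int:
    """Map a signed 64-bit value to unsigned so small magnitudes stay small."""
    return (n << 1) ^ (n >> 63)  # valid for n in the signed 64-bit range


def encode_long(n: int) -> bytes:
    """Avro int/long encoding: zig-zag, then base-128 varint, low 7 bits first."""
    z = zigzag(n)
    out = bytearray()
    while z >= 0x80:
        out.append((z & 0x7F) | 0x80)  # more bytes follow: set the continuation bit
        z >>= 7
    out.append(z)
    return bytes(out)


def encode_string(s: str) -> bytes:
    """Avro string encoding: byte length as a long, then the UTF-8 bytes."""
    data = s.encode("utf-8")
    return encode_long(len(data)) + data


def encode_record(record: dict) -> bytes:
    """Hypothetical schema {"id": long, "name": string}: fields in schema order,
    no field names or type tags; the reader's copy of the schema supplies them."""
    return encode_long(record["id"]) + encode_string(record["name"])


record = {"id": 42, "name": "kafka"}
avro_bytes = encode_record(record)
json_bytes = json.dumps(record, separators=(",", ":")).encode("utf-8")
print(avro_bytes.hex(), len(avro_bytes))  # 540a6b61666b61 7
print(len(json_bytes))                    # 24
```

The compact JSON form repeats the field names in every message; the Avro bytes carry only the values.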

Apache Avro and Apache Parquet are both popular data formats in big data processing, and each is suited to different use cases. Avro is row-oriented, which suits writing and reading whole records such as Kafka messages; Parquet is column-oriented, which suits analytical queries that scan a few columns across many rows. In Kafka, Schema Registry combined with Avro serialization acts as a data contract between producers and consumers, which helps in building robust data pipelines.

Avro Schema Serializer and Deserializer for Schema Registry on Confluent Platform

Confluent Platform provides an Avro serializer and deserializer that work with Schema Registry, so that Apache Kafka producers and consumers can use Avro schemas. Apache Kafka is a distributed event streaming platform used extensively in modern data architectures because it can handle high-throughput data streams. This tutorial also covers common uses of Avro: how to use it and when to use it.
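Confluent's Avro serializer does not send the bare Avro bytes. As described in Confluent's Schema Registry documentation on the wire format, each message starts with a magic byte `0`, then the 4-byte big-endian schema ID assigned by Schema Registry, then the Avro binary payload. A minimal Python sketch of that framing; `frame`, `unframe`, and the schema ID `7` are hypothetical.

```python
import struct
from typing import Tuple

MAGIC_BYTE = 0  # first byte of every message in the Confluent wire format


def frame(schema_id: int, avro_payload: bytes) -> bytes:
    """Prepend the wire-format header: magic byte + 4-byte big-endian schema ID."""
    return struct.pack(">BI", MAGIC_BYTE, schema_id) + avro_payload


def unframe(message: bytes) -> Tuple[int, bytes]:
    """Split a framed message into (schema_id, avro_payload)."""
    if len(message) < 5 or message[0] != MAGIC_BYTE:
        raise ValueError("message is not in the Confluent wire format")
    (schema_id,) = struct.unpack(">I", message[1:5])
    return schema_id, message[5:]


# Schema ID 7 is made up; a real serializer obtains the ID from Schema Registry.
payload = bytes.fromhex("540a6b61666b61")  # {"id": 42, "name": "kafka"} under a two-field schema
message = frame(7, payload)
print(message.hex())     # 0000000007540a6b61666b61
print(unframe(message))  # (7, b'T\nkafka')
```

Carrying only an ID keeps the per-message overhead at five bytes; the deserializer looks the full schema up in Schema Registry (and typically caches it).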

Both JSON and Avro are popular data serialization formats, each with its own advantages and use cases: JSON is human-readable and needs no schema, while Avro is compact and enforces a schema. As an Apache Kafka® deployment grows, the benefits of using a schema registry to ensure data quality and consistency quickly become compelling. Confluent Schema Registry is included in Confluent Platform.

Schema Registry plays a central role in ensuring data compatibility in Kafka: it manages schemas and checks that each new schema version remains compatible with earlier ones. Avro supports several features that make it a popular choice for big data systems, especially when working with Hadoop, Kafka, or other distributed data platforms.
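The core idea behind BACKWARD compatibility (Schema Registry's default) is that consumers using the new schema can still read data written with the old one. Under Avro's resolution rules, that holds when every field added in the new schema has a default and shared fields keep their types. The Python sketch below checks only that simplified rule; `is_backward_compatible` is hypothetical, and real checks also handle type promotions (such as `int` to `long`), unions, aliases, and nested records.

```python
def is_backward_compatible(new_schema: dict, old_schema: dict) -> bool:
    """Simplified check: can a reader using new_schema read data written with old_schema?"""
    old_fields = {f["name"]: f for f in old_schema["fields"]}
    for field in new_schema["fields"]:
        old = old_fields.get(field["name"])
        if old is None:
            # Field added in the new schema: the reader needs a default to fill it in.
            if "default" not in field:
                return False
        elif old["type"] != field["type"]:
            return False  # type changed; promotions are ignored in this sketch
    # Fields removed in the new schema are simply skipped by the reader.
    return True


v1 = {"type": "record", "name": "User", "fields": [
    {"name": "id", "type": "long"},
    {"name": "name", "type": "string"},
]}
v2_ok = {"type": "record", "name": "User", "fields": [
    {"name": "id", "type": "long"},
    {"name": "name", "type": "string"},
    {"name": "email", "type": "string", "default": ""},
]}
v2_bad = {"type": "record", "name": "User", "fields": [
    {"name": "id", "type": "long"},
    {"name": "name", "type": "string"},
    {"name": "email", "type": "string"},  # no default
]}

print(is_backward_compatible(v2_ok, v1))   # True
print(is_backward_compatible(v2_bad, v1))  # False
```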

How to Serialize and Deserialize Dates in Avro

Avro data in Apache Kafka can serve as both a source and a sink for streaming data in Databricks. In Spring Boot applications, Kafka messages can be validated against schemas by combining Avro, Schema Registry, and Kafka producers and consumers.

- Avro vs. Parquet: A Complete Comparison for Big Data Storage

- Schema Registry for Confluent Platform

- Kafka Streams Data Types and Serialization for Confluent Platform

- Leveraging Schema Registry to Ensure Data Compatibility in Kafka

- Protobuf vs Avro for Kafka, what to choose?

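If Java can already serialize almost any object for sending over the network, why use Apache Avro, a separate serialization framework that originated in the Hadoop project, for Kafka instead of simply serializing regular JSON? Java's built-in serialization only works between JVM programs, while Avro is language-neutral; and unlike JSON, Avro does not repeat field names in every message, because the reader already has the schema.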

Understanding Avro

Avro, an open-source data serialization system, has emerged as a powerful option for efficient serialization and deserialization of data. A typical setup uses a Kafka producer configured with an Avro serializer, and a Kafka consumer that subscribes to the topic and uses an Avro deserializer.
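On the consumer side, an Avro deserializer reverses the binary encoding defined in the Avro specification: it reads each zig-zag varint, undoes the zig-zag mapping, and reads strings as a length followed by UTF-8 bytes, all in the order the schema dictates. A minimal Python sketch, assuming a hypothetical two-field schema (`id` as `long`, `name` as `string`); the function names are illustrative only.

```python
from typing import Tuple


def decode_long(buf: bytes, pos: int) -> Tuple[int, int]:
    """Read one zig-zag varint (Avro int/long) at pos; return (value, next_pos)."""
    shift = 0
    z = 0
    while True:
        byte = buf[pos]
        pos += 1
        z |= (byte & 0x7F) << shift
        if not byte & 0x80:  # continuation bit clear: last byte of this number
            break
        shift += 7
    return (z >> 1) ^ -(z & 1), pos  # undo the zig-zag mapping


def decode_string(buf: bytes, pos: int) -> Tuple[str, int]:
    """Read an Avro string: a long length followed by that many UTF-8 bytes."""
    length, pos = decode_long(buf, pos)
    return buf[pos:pos + length].decode("utf-8"), pos + length


def decode_record(buf: bytes) -> dict:
    """Hypothetical schema {"id": long, "name": string}, read in schema order."""
    user_id, pos = decode_long(buf, 0)
    name, pos = decode_string(buf, pos)
    return {"id": user_id, "name": name}


print(decode_record(bytes.fromhex("540a6b61666b61")))  # {'id': 42, 'name': 'kafka'}
```

Without the schema these bytes are meaningless, which is why the consumer must know exactly which schema the producer wrote with.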

Integrating Kafka with Apache Avro and Schema Registry makes it possible to manage schema changes over time.

Schema Registry for Confluent Platform

Schema Registry provides a centralized repository for managing and validating schemas for topic message data, and for serializing and deserializing that data over the network.

Avro schema evolution is an automatic transformation between the schema the producer used to write data into the Kafka log (the writer schema) and the schema version the consumer reads with (the reader schema). In today's real-time data ecosystem, scalability, flexibility, and modular design are crucial; one strategy for achieving these goals is to send data to multiple Kafka topics. Avro is a data serialization framework that relies on schemas to define the structure of the data.
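The Avro specification's record-resolution rules describe that transformation: a field present in both schemas keeps its value, a field only in the writer schema is ignored, and a field only in the reader schema takes the reader's default (it is an error if there is none). Real Avro applies these rules while decoding bytes; the Python sketch below applies them to an already-decoded record for readability. `resolve` and the `User` schemas are hypothetical.

```python
def resolve(record: dict, writer_schema: dict, reader_schema: dict) -> dict:
    """Apply Avro's record-resolution rules to a record decoded with writer_schema."""
    writer_fields = {f["name"] for f in writer_schema["fields"]}
    resolved = {}
    for field in reader_schema["fields"]:  # output follows the reader's field list
        name = field["name"]
        if name in writer_fields:
            resolved[name] = record[name]      # present in both: keep the value
        elif "default" in field:
            resolved[name] = field["default"]  # reader-only field: use its default
        else:
            raise ValueError(f"reader field {name!r} has no default and is absent from the writer schema")
    return resolved  # writer-only fields were never copied, so they are ignored


V1 = {"type": "record", "name": "User", "fields": [
    {"name": "id", "type": "long"},
    {"name": "name", "type": "string"},
]}
V2 = {"type": "record", "name": "User", "fields": [
    {"name": "id", "type": "long"},
    {"name": "name", "type": "string"},
    {"name": "email", "type": "string", "default": ""},
]}

# Old producer (V1), new consumer (V2): the missing field gets its default.
print(resolve({"id": 42, "name": "kafka"}, V1, V2))
# {'id': 42, 'name': 'kafka', 'email': ''}

# New producer (V2), old consumer (V1): the extra field is dropped.
print(resolve({"id": 42, "name": "kafka", "email": "a@example.com"}, V2, V1))
# {'id': 42, 'name': 'kafka'}
```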


Why Schema Registry?

In modern data-driven architectures, especially on streaming platforms like Apache Kafka, ensuring data quality and consistency is paramount. At its core, Kafka only transfers data as bytes: the Kafka cluster performs no data verification and does not even know what type of data it is carrying. In a standard Kafka setup, checking data against a schema is therefore left to the clients, typically the serializer.
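Since the broker accepts any bytes, a producer-side check is where a malformed record gets caught before it reaches the topic. The Python sketch below validates a dict against a record schema for a few Avro primitive types, using the 32-bit range for `int` and the 64-bit range for `long` from the Avro specification. `validate`, `USER_SCHEMA`, and the error messages are hypothetical; a real Avro serializer performs its own, more complete checks.

```python
# Hypothetical client-side check covering a few Avro primitive types only.
INT32 = (-2**31, 2**31 - 1)
INT64 = (-2**63, 2**63 - 1)


def _matches(avro_type: str, value) -> bool:
    """Return True if a Python value fits the given Avro primitive type."""
    is_integer = isinstance(value, int) and not isinstance(value, bool)
    if avro_type == "boolean":
        return isinstance(value, bool)
    if avro_type == "int":
        return is_integer and INT32[0] <= value <= INT32[1]
    if avro_type == "long":
        return is_integer and INT64[0] <= value <= INT64[1]
    if avro_type in ("float", "double"):
        return isinstance(value, (int, float)) and not isinstance(value, bool)
    if avro_type == "string":
        return isinstance(value, str)
    raise NotImplementedError(f"type {avro_type!r} is not covered by this sketch")


def validate(record: dict, schema: dict) -> list:
    """Return a list of problems; an empty list means the record fits the schema."""
    errors = []
    schema_names = {f["name"] for f in schema["fields"]}
    for field in schema["fields"]:
        name = field["name"]
        if name not in record:
            errors.append(f"missing field {name!r}")
        elif not _matches(field["type"], record[name]):
            errors.append(f"field {name!r} is not a valid {field['type']}")
    for name in record:
        if name not in schema_names:
            errors.append(f"unknown field {name!r}")
    return errors


USER_SCHEMA = {"type": "record", "name": "User", "fields": [
    {"name": "id", "type": "long"},
    {"name": "name", "type": "string"},
]}

print(validate({"id": 42, "name": "kafka"}, USER_SCHEMA))    # []
print(validate({"id": "42", "name": "kafka"}, USER_SCHEMA))  # ["field 'id' is not a valid long"]
```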

When you configure a Kafka Data Set in Pega Platform™, you can choose Apache Avro as the data format for Kafka message values and message keys.
